Machine learning is a subfield of artificial intelligence (AI) that focuses on developing algorithms and models that enable computer systems to learn from data and make predictions or decisions without being explicitly programmed. It has gained immense popularity and has become a key tool for solving complex problems and extracting meaningful insights from vast amounts of data.

In this topic, we will explore the fascinating world of machine learning and delve into its various aspects. We will cover both supervised and unsupervised learning techniques, their applications, and the fundamental concepts behind them. By the end of this topic, you will have a solid understanding of the key considerations involved in implementing machine learning solutions. You will be equipped with the knowledge and tools to apply machine learning algorithms to real-world problems and make informed decisions based on the insights derived from data.

Watch the video below to find out more about the basics of machine learning:

Supervised learning is a machine learning approach in which an algorithm learns from labelled training data to make predictions or decisions. In this type of learning, the training data consists of input features (also known as independent variables) and their corresponding target values or labels (also known as dependent variables). The goal of supervised learning is to train a model that can accurately predict the target values for new, unseen data.

Types of learning systems in supervised learning

In supervised learning, various types of learning systems or algorithms can be used to train models based on labelled data. Here are some common types of learning systems for supervised learning:

These are just a few examples of learning systems used in supervised learning. Each algorithm has its own strengths, weaknesses, and suitability for different types of data and problem domains. The choice of learning system depends on factors such as the complexity of the problem, the nature of the data, the interpretability requirements, and the computational resources available.

Categories of supervised learning: classification and regression

In supervised learning, the two main categories are classification and regression. Let's take a closer look at each category:

Classification

Classification is a type of supervised learning where the goal is to assign input data to a specific class or category. In classification tasks, the target variable or label is categorical. The algorithm learns from labelled training data and aims to generalize its knowledge to accurately classify new, unseen data. Here are a few examples of classification problems:

- Email spam detection: Classifying emails as spam or non-spam based on features such as subject, sender, and content.

- Image classification: Identifying objects or scenes in images, such as classifying whether an image contains a cat or a dog.

- Disease diagnosis: Predicting the presence or absence of a disease based on medical test results and patient symptoms.

- Sentiment analysis: Determining the sentiment (positive, negative, or neutral) expressed in a piece of text, such as classifying customer reviews as positive or negative.
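To make the idea of learning from labelled examples concrete, here is a minimal sketch of a classifier in plain Python. It uses k-nearest neighbours, which is only one of many possible classification algorithms. The animal measurements, the feature choice, and the function name `knn_classify` are made up for illustration.

```python
from collections import Counter
import math

# Toy labelled data (invented for illustration):
# (weight in kg, ear length in cm) -> class label
training_data = [
    ((4.0, 6.5), "cat"),
    ((3.5, 6.0), "cat"),
    ((5.0, 7.0), "cat"),
    ((20.0, 11.0), "dog"),
    ((25.0, 12.5), "dog"),
    ((30.0, 13.0), "dog"),
]

def knn_classify(point, data, k=3):
    """Return the majority label among the k training points closest to `point`."""
    by_distance = sorted(data, key=lambda item: math.dist(point, item[0]))
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

print(knn_classify((4.2, 6.3), training_data))    # cat
print(knn_classify((27.0, 12.0), training_data))  # dog
```

The target here is categorical ("cat" or "dog"), which is what makes this a classification problem rather than a regression problem.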

Regression

Regression is another category of supervised learning that deals with predicting a continuous numerical value as the output. In regression tasks, the target variable is continuous, and the algorithm learns from labelled training data to establish a relationship between input features and the predicted output. Some examples of regression problems include:

- House price prediction: Estimating the price of a house based on features such as size, number of bedrooms, location, etc.

- Stock market forecasting: Predicting the future value of a stock based on historical price data and other relevant factors.

- Demand forecasting: Estimating the future demand for a product based on historical sales data and market variables.

- Weather prediction: Predicting temperature, rainfall, or other weather parameters based on historical weather patterns and atmospheric data.
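As an illustration of regression, the sketch below fits a straight line to a handful of house sizes and prices using ordinary least squares with a single feature. The numbers are invented and deliberately lie on a perfect line so the result is easy to check. Real data would be noisier and would usually involve several features.

```python
# Toy data (invented): house size in square metres -> price in thousands
sizes = [50.0, 70.0, 90.0, 110.0, 130.0]
prices = [150.0, 200.0, 250.0, 300.0, 350.0]

def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

slope, intercept = fit_line(sizes, prices)

# Predict a continuous value for a new, unseen house of 100 square metres.
predicted_price = slope * 100.0 + intercept
print(slope, intercept, predicted_price)  # 2.5 25.0 275.0
```

Unlike the classification case, the output is a number on a continuous scale, so the quality of a prediction is measured by how far it is from the true value rather than by whether it matches a label.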

In both classification and regression tasks, the supervised learning algorithm aims to learn a mapping from the input features to the target variable: a decision boundary that separates the classes in classification, or a function that relates the features to a numerical output in regression. The quality of the training data, the choice of algorithm, and the use of appropriate evaluation metrics all play crucial roles in developing accurate and reliable models.

Variations and extensions

It's important to note that there are also variations and extensions of supervised learning, some of which include:

- multi-class classification, where there are more than two classes

- ordinal regression, where the target variable has an ordered structure

- time series forecasting, where the input data is sequential in nature.

These variations cater to specific problem scenarios and allow for more nuanced modelling approaches.

Watch the video below to find out more about classification and regression.

Basic steps involved in supervised machine learning

Supervised machine learning involves several basic steps to develop and train a model using labelled data. Here are the key steps typically involved in supervised machine learning:

- Data collection: The first step is to gather a dataset that contains labelled examples of input data along with their corresponding target variables or class labels. The quality and representativeness of the data are crucial for building an accurate model.

- Data preprocessing: Once the data is collected, it needs to be preprocessed (cleaned) to ensure its quality and suitability for training. This step may involve handling missing values, removing outliers, normalizing or scaling the data, and encoding categorical variables into a numerical format.

- Feature selection/extraction: In this step, the relevant features or attributes from the dataset are selected or extracted to represent the input data. Feature selection helps reduce dimensionality and focus on the most informative attributes. Feature extraction techniques, such as principal component analysis (PCA) or feature engineering, can also be applied to create new features that capture meaningful patterns in the data.

- Splitting the dataset: The labelled dataset is typically divided into two subsets: a training set and a testing set. A common split is 70-80% for training and 20-30% for testing, but this can vary depending on the size and characteristics of the dataset.

- Model selection: The next step involves selecting an appropriate machine-learning algorithm or model for the task at hand. The choice of the model depends on factors such as the problem type (classification or regression), the complexity of the data, the interpretability requirements, and the available computational resources. It is important to consider the strengths and limitations of different models and select the one that is best suited for the problem.

- Model training: The selected model is trained using the labelled examples in the training set. During training, the model learns the underlying patterns and relationships between the input features and the target variable by adjusting its internal parameters. This process involves an optimization algorithm that minimizes the difference between the predicted outputs and the actual labels.

- Model evaluation: Once the model is trained, it is evaluated using the testing set. The evaluation metrics depend on the problem type: accuracy, precision, recall, and F1-score are common for classification, while mean squared error (MSE) and root mean squared error (RMSE) are common for regression. The evaluation provides insights into how well the model generalizes to new, unseen data and helps identify potential issues like overfitting or underfitting.

- Model tuning: If the model's performance is not satisfactory, further steps can be taken to improve it. This can involve fine-tuning the model's hyperparameters (e.g., learning rate, regularization strength) or exploring different variations of the model architecture. Techniques like cross-validation or grid search can be employed to find the optimal set of hyperparameters.

- Model deployment: Once the model has been trained and evaluated to meet the desired performance criteria, it can be deployed for making predictions on new, unseen data. The model is used to generate predictions or classifications based on the input features, providing valuable insights and aiding decision-making processes.

It's important to note that the above steps provide a high-level overview of the supervised machine-learning process. The actual implementation may involve iterative cycles of experimentation, refinement, and evaluation to develop an effective and accurate model.
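The core of the steps above (collecting data, splitting it, training, and evaluating) can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not a production workflow. The data is synthetic and deliberately easy to separate, the "model" is a basic nearest-centroid classifier, and the function names are hypothetical.

```python
import math
import random

# 1. Data collection: synthetic labelled data (invented for illustration).
#    Label 0 points lie in [0, 1] x [0, 1]; label 1 points lie in [3, 4] x [3, 4].
rng = random.Random(0)
dataset = [((rng.uniform(0, 1), rng.uniform(0, 1)), 0) for _ in range(50)]
dataset += [((rng.uniform(3, 4), rng.uniform(3, 4)), 1) for _ in range(50)]

# 2. Splitting: shuffle, then keep 80% for training and 20% for testing.
rng.shuffle(dataset)
split_index = int(0.8 * len(dataset))
train_set, test_set = dataset[:split_index], dataset[split_index:]

# 3. Training: the "model" is simply the mean point (centroid) of each class.
def train_centroids(examples):
    sums = {}
    for (x, y), label in examples:
        total_x, total_y, count = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (total_x + x, total_y + y, count + 1)
    return {label: (tx / n, ty / n) for label, (tx, ty, n) in sums.items()}

def predict(centroids, point):
    """Predict the label whose centroid is closest to `point`."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))

# 4. Evaluation: compare predictions with the held-out labels.
def evaluate(centroids, examples, positive=1):
    tp = fp = fn = correct = 0
    for point, label in examples:
        guess = predict(centroids, point)
        correct += guess == label
        tp += guess == positive and label == positive
        fp += guess == positive and label != positive
        fn += guess != positive and label == positive
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(examples), "precision": precision,
            "recall": recall, "f1": f1}

model = train_centroids(train_set)
metrics = evaluate(model, test_set)
print(metrics)
```

Because the two synthetic classes do not overlap, this toy model classifies every test point correctly. On real data, the gap between performance on the training set and on the testing set is what reveals overfitting or underfitting.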

Real-world examples of supervised learning

Supervised learning has numerous real-world applications across various domains. Here are some examples of how supervised learning is used in practice:

Email spam filtering

Supervised learning algorithms are commonly employed in email spam filtering systems. The algorithm is trained on a labelled dataset of emails, where each email is classified as either spam or non-spam. The model learns to distinguish between the two classes based on features such as keywords, email headers, and message content. Once trained, the model can accurately classify incoming emails as spam or legitimate, helping users manage their email efficiently.

Medical diagnosis

Supervised learning plays a vital role in medical diagnosis, where models are trained to predict and identify diseases based on patient symptoms, medical test results, and other relevant factors. By training on historical patient data, the model can learn patterns and associations between symptoms and diseases, aiding doctors in making accurate diagnoses and suggesting appropriate treatments.

Image recognition

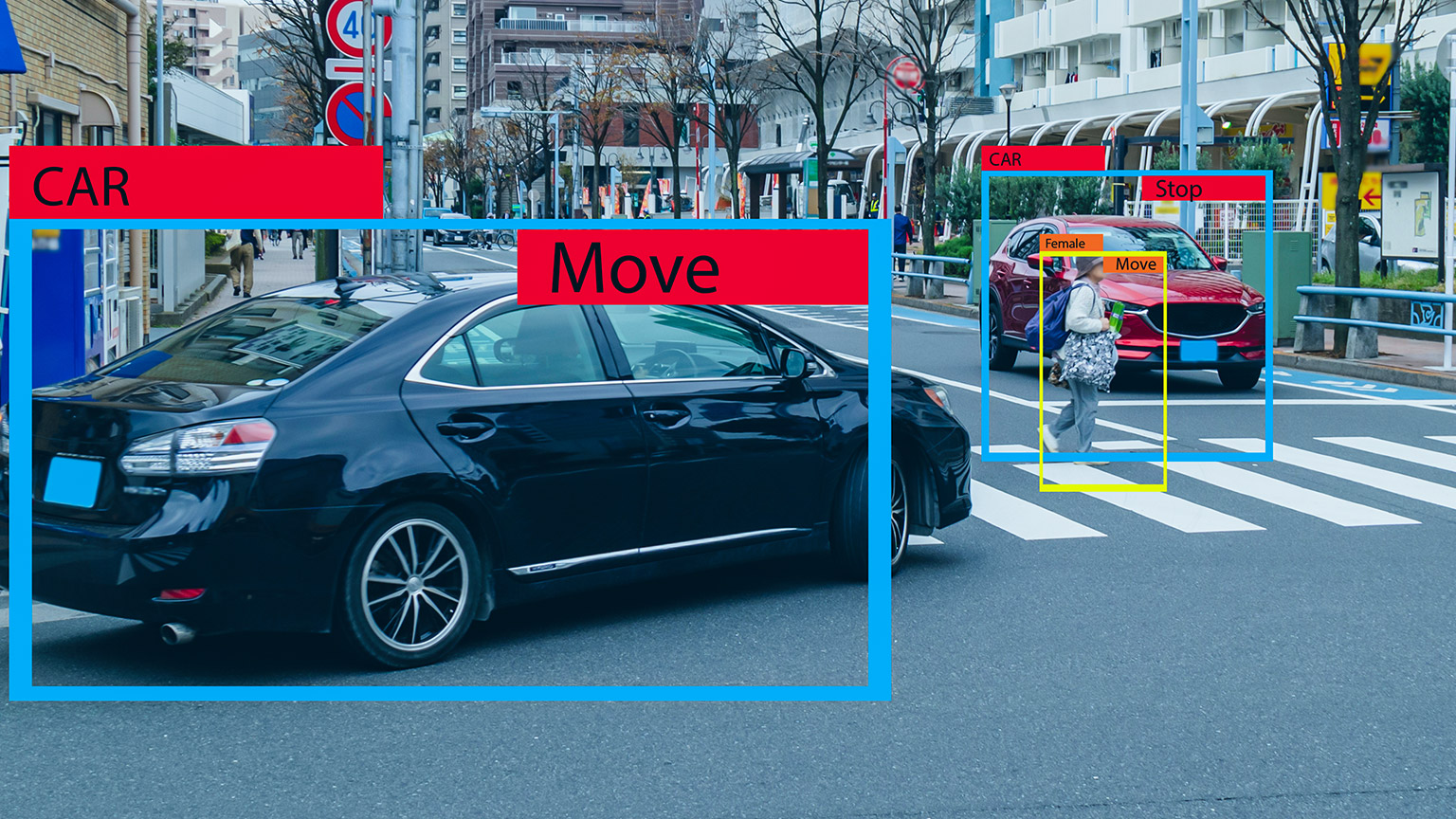

Image recognition applications rely on supervised learning to classify or recognize objects within images. For instance, facial recognition systems leverage supervised learning to identify individuals from images or video footage, enabling various applications such as biometric authentication and surveillance.

Credit scoring

In the financial sector, supervised learning is widely used for credit scoring, where the goal is to assess the creditworthiness of individuals or businesses. By training on historical credit data, models can predict the likelihood of a borrower defaulting on a loan or making timely payments. This helps financial institutions make informed decisions when approving or denying credit applications.

Natural language processing (NLP)

NLP applications heavily rely on supervised learning techniques. For example, sentiment analysis algorithms are trained on labelled text data to classify the sentiment of customer reviews, social media posts, or news articles as positive, negative, or neutral. Supervised learning is also used for text classification tasks, such as spam detection, topic classification, and document categorization.

Watch the video below to see some examples of supervised learning.

Unsupervised learning is a machine learning approach where the objective is to discover patterns, structures, or relationships in a dataset without any explicit target variable or labelled data. In unsupervised learning, the algorithms work with unlabelled data and aim to uncover hidden insights and structures within the data.

Clustering

Clustering is a common task in unsupervised learning where the algorithm groups similar data points together based on their inherent characteristics or similarities. The goal is to identify natural clusters or subgroups within the data, where the data points within a cluster share high similarity or proximity, while data points from different clusters are dissimilar.

Various clustering algorithms, such as K-means, hierarchical clustering and DBSCAN, can be used to partition the data into meaningful groups, allowing for pattern discovery and data segmentation. The algorithms consider factors such as distances, densities, or connectivity between data points to create the clusters. The resulting clusters can help in data exploration, segmentation, pattern recognition, and recommendation systems.
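As a rough illustration of how a distance-based clustering algorithm works, here is a minimal K-means sketch in plain Python. The points and the starting centroids are invented, and the starting centroids are passed in by hand to keep the example deterministic. Practical implementations typically choose them randomly or with a smarter initialization scheme.

```python
import math

def k_means(points, initial_centroids, iterations=10):
    """Plain k-means: alternate between assigning points and moving centroids."""
    centroids = list(initial_centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: math.dist(point, centroids[i]))
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(axis) / len(cluster)
                                     for axis in zip(*cluster))
    return centroids, clusters

# Unlabelled toy data (invented): two visually obvious groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]

centroids, clusters = k_means(points, initial_centroids=[points[0], points[3]])
print(clusters[0])  # the three points near (1, 1.5)
print(clusters[1])  # the three points near (8.5, 8.5)
```

Note that no labels are supplied at any point. The groups emerge purely from the distances between the data points, which is what distinguishes clustering from classification.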

Learning Activity

Find an example of AI use that highlights one of the ethical and societal considerations described above.

Research the terms K-means, hierarchical clustering and DBSCAN to start building your knowledge.

Dimensionality reduction

Dimensionality reduction techniques aim to reduce the number of features or variables in a dataset while retaining the most important information. These methods help to overcome the curse of dimensionality and simplify complex datasets. Principal component analysis (PCA), t-distributed stochastic neighbour embedding (t-SNE), and autoencoders are examples of dimensionality reduction techniques commonly used in unsupervised learning. They transform the data into a lower-dimensional space, making it easier to visualize, analyse, and interpret.
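The following sketch shows the basic idea behind PCA by reducing two-dimensional points to a single dimension. It finds only the first principal component, using power iteration on the covariance matrix as a simple stand-in for the full eigendecomposition that library implementations normally perform. The data and the function name are invented for illustration.

```python
import math

def first_principal_component(rows, iterations=100):
    """Return (component, projections) for the direction of greatest variance."""
    n, dims = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(dims)]
    centred = [[row[j] - means[j] for j in range(dims)] for row in rows]
    # Sample covariance matrix of the centred data.
    cov = [[sum(r[a] * r[b] for r in centred) / (n - 1) for b in range(dims)]
           for a in range(dims)]
    # Power iteration: repeatedly multiply by the covariance matrix and
    # normalise. The vector converges towards the leading eigenvector.
    vector = [1.0] + [0.0] * (dims - 1)
    for _ in range(iterations):
        product = [sum(cov[a][b] * vector[b] for b in range(dims))
                   for a in range(dims)]
        norm = math.sqrt(sum(v * v for v in product))
        vector = [v / norm for v in product]
    # Project each centred point onto the component: one number per point.
    projections = [sum(r[j] * vector[j] for j in range(dims)) for r in centred]
    return vector, projections

# Toy data (invented): points scattered around the diagonal line y = x.
data = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0), (5.0, 6.0), (6.0, 5.0)]

component, projections = first_principal_component(data)
print(component)    # roughly [0.707, 0.707], i.e. the diagonal direction
print(projections)  # each 2-D point reduced to a single coordinate
```

Each point is now described by one number instead of two, and most of the variation in the data is preserved because the points lie close to the diagonal. This sketch assumes the data is not degenerate (for example, all points identical), in which case the normalisation step would fail.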

Learning Activity

Research the terms principal component analysis and t-SNE to start building your knowledge.

Anomaly detection

Anomaly detection is the identification of rare or unusual instances within a dataset that deviate significantly from the norm. Unsupervised learning algorithms can learn the underlying distribution of the data and flag instances that exhibit unusual behaviour. This can be useful in detecting fraudulent activities, network intrusions, or equipment failures. Techniques such as Gaussian mixture models (GMMs), one-class support vector machines (one-class SVMs), and isolation forests are commonly used for anomaly detection.
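The sketch below illustrates the general principle of learning what "normal" looks like and flagging what deviates from it. It is much simpler than the techniques named above: it summarizes the data with a single mean and standard deviation and flags values more than three standard deviations from the mean. The sensor readings, the threshold, and the function name are assumptions made for illustration.

```python
import statistics

def find_anomalies(values, threshold=3.0):
    """Flag values whose distance from the mean exceeds `threshold` standard deviations."""
    mean = statistics.mean(values)
    std = statistics.stdev(values)
    return [v for v in values if abs(v - mean) / std > threshold]

# Toy temperature readings (invented): one reading is clearly out of line.
readings = [20.1, 19.8, 20.3, 19.9, 20.0, 20.2, 19.7, 20.1,
            20.0, 19.9, 20.2, 19.8, 20.1, 20.0, 35.0]

print(find_anomalies(readings))  # [35.0]
```

A limitation of this simple approach is that extreme values also inflate the mean and standard deviation they are judged against, so it works poorly on small datasets or data with several anomalies. This is one reason more robust methods such as isolation forests are used in practice.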

Learning Activity

Research the terms Gaussian mixture model, one-class SVM and isolation forests to start building your knowledge.

Association

Association refers to the process of discovering interesting relationships, patterns, or associations among items or variables in a dataset. It aims to find co-occurrence or dependency relationships between different items or variables without considering any specific target variable. Association analysis is commonly used in market basket analysis, where the goal is to identify which items are frequently purchased together.

Association rules are extracted from the data and express how likely certain items are to be present given the presence of other items. These rules are typically represented in the form of "if-then" statements, where the antecedent (the "if" part) represents the items already present, and the consequent (the "then" part) represents the items that are likely to be present as well. The strength of an association rule is measured using metrics such as support, confidence, and lift.
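To show how these three metrics are calculated, here is a small sketch using the standard definitions: support is the fraction of transactions containing an itemset, confidence is the support of the antecedent and consequent together divided by the support of the antecedent, and lift is the confidence divided by the support of the consequent. The shopping baskets are invented for illustration.

```python
# Toy shopping baskets (invented): each transaction is a set of items.
transactions = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "butter"},
    {"bread", "butter", "jam"},
]

def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    return sum(itemset <= t for t in transactions) / len(transactions)

def confidence(antecedent, consequent, transactions):
    """How often the consequent appears in transactions containing the antecedent."""
    return (support(antecedent | consequent, transactions)
            / support(antecedent, transactions))

def lift(antecedent, consequent, transactions):
    """Confidence relative to how common the consequent is overall."""
    return (confidence(antecedent, consequent, transactions)
            / support(consequent, transactions))

# Rule: "if bread, then butter"
rule = ({"bread"}, {"butter"})
print(f"support:    {support(rule[0] | rule[1], transactions):.2f}")  # 0.60
print(f"confidence: {confidence(*rule, transactions):.2f}")           # 0.75
print(f"lift:       {lift(*rule, transactions):.2f}")                 # 0.94
```

A lift above 1 suggests the items appear together more often than would be expected if they were independent, while a lift below 1 (as in this toy example) suggests they appear together slightly less often than expected.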

The main difference between supervised learning and unsupervised learning lies in the presence or absence of labelled data and the nature of the learning tasks.

| | Supervised learning | Unsupervised learning |
|---|---|---|
| Labelled data | Supervised learning requires labelled data, where each data instance is associated with a known target variable or class label. The training data includes both input features and corresponding target values. | Unsupervised learning works with unlabelled data, where there are no predefined target variables or class labels associated with the input data. The training data consists of only input features. |
| Learning task | The goal of supervised learning is to learn a mapping function that can predict the target variable for new, unseen data instances. It involves training a model on labelled data to generalize and make accurate predictions. | The objective of unsupervised learning is to discover patterns, structures, or relationships within the data without any explicit guidance. It focuses on exploring the inherent structure of the data and finding meaningful representations or clusters. |
| Use | Supervised learning is used when there is a clear distinction between input features and target variables. It is suitable for tasks such as classification (assigning categorical labels) and regression (predicting continuous values). It finds applications in areas like email spam filtering, image recognition, sentiment analysis, and medical diagnosis. | Unsupervised learning is employed in scenarios where the goal is to explore and gain insights from the data, identify hidden patterns, or perform data preprocessing. It is suitable for tasks such as clustering (grouping similar instances), dimensionality reduction (extracting essential features), and anomaly detection (identifying outliers). Examples include customer segmentation, image clustering, data visualization, and recommendation systems. |

Choosing the appropriate learning approach depends on the availability of labelled data and the nature of the problem at hand. Supervised learning is suitable when labelled data is available, and the goal is to make predictions or classifications. Unsupervised learning is preferred in scenarios where labelled data is scarce or non-existent, and the objective is to uncover hidden structures or patterns within the data. Additionally, unsupervised learning techniques can be used as preprocessing steps to extract useful features for subsequent supervised learning tasks.

In some cases, a combination of both supervised and unsupervised learning techniques can be employed to tackle complex problems. For example, unsupervised learning can be used for dimensionality reduction or clustering, followed by supervised learning to train a model for classification or regression.

Watch the video below to find out more about supervised and unsupervised learning.

Learning Activity

Use information from this course and your own research to answer the following questions:

- What is the difference between supervised learning and unsupervised learning?

- What is the difference between classification and regression? Find some example applications.

- What is clustering and why is it important?

In the forum 'Learning Activity 3: Supervised vs. Unsupervised Learning' write approximately 50-100 words for each answer.