Broderick, P. C., & Blewitt, P. (2015). The life span: Human development for helping professionals (4th ed.). Pearson Education.

What importance do difficulties in getting along with others have for a 6-year-old youngster? Is she just “passing through a stage”? How do parenting practices affect a child’s developing self-concept? How much freedom should be given to adolescents? Does the experience of sex discrimination affect a teenage girl’s identity formation? What implications do social problems with friends and coworkers suggest for a 22-year-old male? Does stereotype threat (such as expecting to be judged on the basis of race) alter the course of development? How significant is it for a married couple to experience increased conflicts following the births of their children? Does divorce cause lasting emotional damage to the children involved in a family breakup? What kind of day care experience is best for young children? Do we normally lose many intellectual abilities as we age? What factors enable a person to overcome early unfavorable circumstances and become a successful, healthy adult?

These intriguing questions represent a sampling of the kinds of topics that developmental scientists tackle. Their goal is to understand life span development: human behavioral change from conception to death. “Behavioral” change refers broadly to change in both observable activity (e.g., from crawling to walking) and mental activity (e.g., from disorganized to logical thinking). More specifically, developmental science seeks to:

- describe people’s behavioral characteristics at different ages,

- identify how people are likely to respond to life’s experiences at different ages,

- formulate theories that explain how and why we see the typical characteristics and responses that we do, and

- understand what factors contribute to developmental differences from one person to another.

Using an array of scientific tools designed to obtain objective (unbiased) information, developmentalists make careful observations and measurements, and they test theoretical explanations empirically. See the Appendix for A Practitioner’s Guide to the Methods of Developmental Science.

Developmental science is not a remote or esoteric body of knowledge. Rather, it has much to offer the helping professional both professionally and personally.

As you study developmental science, you will build a knowledge base of information about age-related behaviors and about causal theories that help organize and make sense of these behaviors. These tools will help you better understand client concerns that are rooted in shared human experience. And when you think about clients’ problems from a developmental perspective, you will increase the range of problem-solving strategies that you can offer. Finally, studying development can facilitate personal growth by providing a foundation for reflecting on your own life.

Reflection and Action

Despite strong support for a comprehensive academic grounding in scientific developmental knowledge for helping professionals (e.g., Van Hesteren & Ivey, 1990), there has been a somewhat uneasy alliance between practitioners, such as mental health professionals, and those with a more empirical bent, such as behavioral scientists. The clinical fields have depended on research from developmental psychology to inform their practice. Yet in the past, overreliance on traditional experimental methodologies sometimes resulted in researchers’ neglect of important issues that could not be studied using these rigorous methods (Hetherington, 1998). Consequently, there was a tendency for clinicians to perceive some behavioral science literature as irrelevant to real-world concerns (Turner, 1986). Clearly, the gap between science and practice is not unique to the mental health professions. Medicine, education, and law have all struggled with the problems involved in preparing students to grapple with the complex demands of the workplace. Contemporary debate on this issue has led to the development of serious alternative paradigms for the training of practitioners.

One of the most promising of these alternatives for helping professionals is the concept of reflective practice. The idea of “reflectivity” derives from Dewey’s (1933/1998) view of education, which emphasized careful consideration of one’s beliefs and forms of knowledge as a precursor to practice. Donald Schon (1987), a modern pioneer in the field of reflective practice, describes the problem this way:

In the varied topography of professional practice, there is a high, hard ground overlooking a swamp. On the high ground, manageable problems lend themselves to solution through the application of research-based theory and technique. In the swampy lowland, messy confusing problems defy technical solutions. The irony of this situation is that the problems of the high ground tend to be relatively unimportant to individuals or society at large, however great their technical interest may be, while in the swamp lie the problems of greatest human concern. (p. 3)

The Gap Between Science and Practice

Traditionally, the modern, university-based educational process has been driven by the belief that problems can be solved best by applying objective, technical, or scientific information amassed from laboratory investigations. Implicit in this assumption is that human nature operates according to universal principles that, if known and understood, will enable us to predict behavior. For example, if I understand the principles of conditioning and reinforcement, I can apply a contingency contract to modify my client’s inappropriate behavior. Postmodern critics have pointed out the many difficulties associated with this approach. Sometimes a “problem” behavior is related to, or maintained by, neurological, systemic, or cultural conditions. Sometimes the very existence of a problem may be a cultural construction. Unless a problem is viewed within its larger context, a problem-solving strategy may prove ineffective.

Most of the situations helpers face are confusing, complex, ill-defined, and often unresponsive to the application of a simple, specific set of scientific principles. Thus, the training of helping professionals often involves a “dual curriculum.”

The first is more formal and may be presented as a conglomeration of research-based facts, whereas the second, often learned in a practicum, field placement or first job, covers the curriculum of “what is really done” when working with clients. The antidote to this dichotomous pedagogy, Schon (1987) and his followers suggest, is reflective practice. This is a creative method of thinking about practice in which the helper masters the knowledge and skills base pertinent to the profession but is encouraged to go beyond rote technical applications to generate new kinds of understanding and strategies of action. Rather than relying solely on objective technical applications to determine ways of operating in a given situation, the reflective practitioner constructs solutions to problems by engaging in personal hypothesis generating and hypothesis testing.

How can one use the knowledge of developmental science in a meaningful and reflective way? What place does it have in the process of reflective construction? Consideration of another important line of research, namely, that of characteristics of expert problem solvers, will help us answer this question. Research studies on expert–novice differences in many areas such as teaching, science, and athletics all support the contention that experts have a great store of knowledge and skill in a particular area. Expertise is domain specific. When compared to novices in any given field, experts possess well-organized and integrated stores of information that they draw on, almost automatically, when faced with novel challenges. Because this knowledge is well practiced, truly a “working body” of information, retrieval is relatively easy (Lewandowsky & Thomas, 2009). Progress in problem solving is closely self-monitored. Problems are analyzed and broken down into smaller units, which can be handled more efficiently.

If we apply this information to the reflective practice model, we can see some connections. One core condition of reflective practice is that practitioners use theory as a “partial lens through which to consider a problem” (Nelson & Neufeldt, 1998). Practitioners also use another partial lens: their professional and other life experience. In reflective practice, theory-driven hypotheses about client and system problems are generated and tested for goodness of fit. A rich supply of problem-solving strategies depends on a deep understanding of and thorough grounding in fundamental knowledge germane to the field. Notice that there is a sequence to reflective practice. Schon (1987), for example, argues against putting the cart before the horse. He states that true reflectivity depends on the ability to “recognize and apply standard rules, facts and operations; then to reason from general rules to problematic cases in ways characteristic of the profession; and only then to develop and test new forms of understanding and action where familiar categories and ways of thinking fail” (p. 40). In other words, background knowledge is important, but it is most useful in a dynamic interaction with contextual applications (Hoshmand & Polkinghorne, 1992). A working knowledge of human development supplies the helping professional with a firm base from which to proceed.

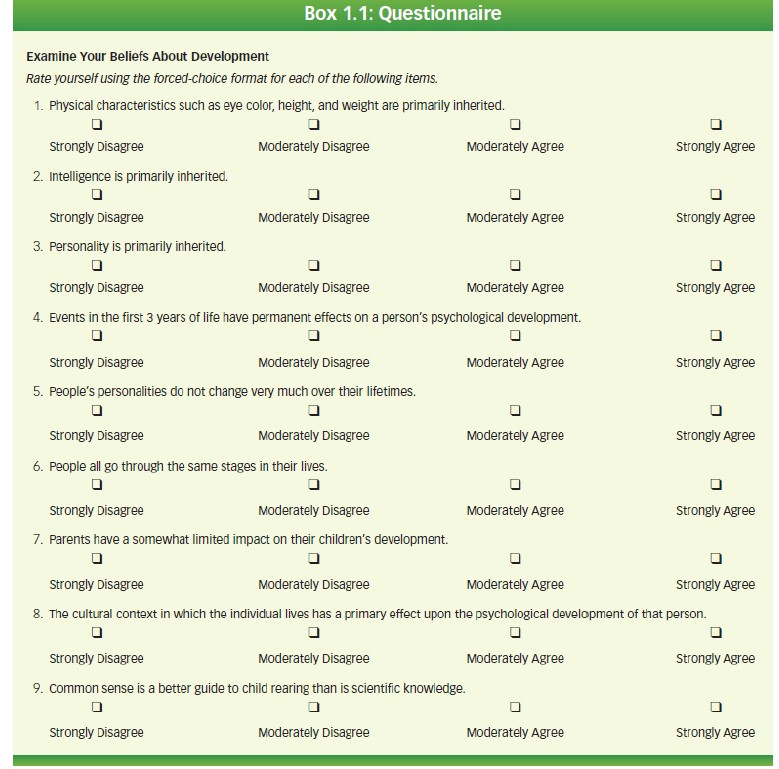

Given the relevance of background knowledge to expertise in helping and to reflective practice, we hope we have made a sufficiently convincing case for the study of developmental science. However, it is obvious that students approaching this study are not “blank slates.” You already have many ideas and theories about the ways that people grow and change. These implicit theories have been constructed over time, partly from personal experience, observation, and your own cultural “take” on situations. Dweck and her colleagues have demonstrated that reliably different interpretations of situations can be predicted based on individual differences in people’s implicit beliefs about certain human attributes, such as intelligence or personality (see Dweck & Elliott-Moskwa, 2010). Take the case of intelligence. If you happen to hold the implicit belief that a person’s intellectual capacity can change and improve over time, you might be more inclined to take a skill-building approach to some presenting problem involving knowledge or ability. However, if you espouse the belief that a person’s intelligence is fixed and not amenable to incremental improvement, possibly because of genetic inheritance, you might be more likely to encourage a client to cope with and adjust to cognitive limitations. For helping professionals, the implicit theoretical lens that shapes their worldview can have important implications for their clients.

We are often reluctant to give up our personal theories even in the face of evidence that these theories are incorrect (Gardner, 1991; Kuhn, 2005). The best antidote to misapplication of our personal views is self-monitoring: being aware of what our theories are and recognizing that they are only one of a set of possibilities. (See Chapter 11 for a more extensive discussion of this issue.) Before we discuss some specific beliefs about the nature of development, take a few minutes to consider what you think about the questions posed in Box 1.1.

A Historical Perspective on Developmental Theories

Now that you have examined some of your own developmental assumptions, let’s consider the theoretical views that influence developmentalists, with special attention to how these views have evolved through the history of developmental science. Later, we will examine how different theoretical approaches might affect the helping process.

Like you, developmental scientists bring to their studies theoretical assumptions that help to structure their understanding of known facts. These assumptions also guide their research and shape how they interpret new findings. Scientists tend to develop theories that are consistent with their own cultural background and experience; no one operates in a vacuum. A core value of Western scientific method is a pursuit of objectivity, so that scientists are committed to continuously evaluating their theories in light of evidence. As a consequence, scientific theories change over time.

Throughout this text, you will be introduced to many developmental theories. Some are broad and sweeping in their coverage of whole areas of development, such as Freud’s theory of personality development (see Chapters 7 and 8) or Piaget’s theory of cognitive development (see Chapters 3, 6, and 9); some are narrower in scope, focusing on a particular issue, such as Vygotsky’s theory of the enculturation of knowledge (see Chapter 3) or Bowlby’s attachment theory (see Chapters 4 and 12). You will see that newer theories usually incorporate empirically verified ideas from older theories, but they also reflect changing cultural needs, such as the need to understand successful aging in a longer-lived population. Newer theories also draw from advances in many disciplines, such as biology.

Scientific theories of human development began to emerge in Europe and America in the 19th century. They had their roots in philosophical inquiry, in the emergence of biological science, and in the growth of mass education that accompanied industrialization. Through medieval times in European societies, children and adults of all ages seem to have been viewed and treated in very similar ways (Aries, 1960). Only infants and preschoolers were free of adult responsibilities, although they were not always given the special protections and nurture that they are today. At age 6 or 7, children took on adult roles, doing farmwork or learning a trade, often leaving their families to become apprentices. As the Industrial Revolution advanced, children worked beside adults in mines and factories. People generally seemed “indifferent to children’s special characteristics” (Crain, 2005, p. 2), and there was no real study of children or how they change.

The notion that children only gradually develop the cognitive and personality structures that will characterize them as adults first appeared in the writings of 17th- and 18th-century philosophers, such as John Locke in Great Britain and Jean-Jacques Rousseau in France. In the 19th century, Charles Darwin’s theory of the evolution of species and the growth of biological science helped to foster scholarly interest in children. The assumption grew that a close examination of how children change might help advance our understanding of the human species. Darwin himself introduced an early approach to child study, the “baby biography,” writing a richly detailed account of his young son’s daily changes in language and behavior. By the 18th and 19th centuries, the Industrial Revolution led to the growth of “middle-class” occupations (e.g., merchandizing) that required an academic education: training in reading, writing, and math. The need to educate large numbers of children sharpened the public’s interest in understanding how children change with age.

The first academic departments devoted to child study began to appear on American college campuses in the late 19th and early 20th centuries. The idea that development continues even in adulthood was a 20th-century concept and a natural outgrowth of the study of children. If children’s mental and behavioral processes change over time, perhaps such processes continue to evolve beyond childhood. Interest in adult development was also piqued by dramatic increases in life expectancy in the 19th and 20th centuries, as well as cultural changes in how people live. Instead of single households combining three or four generations of family members, grandparents and other relatives began to live apart from “nuclear families,” so that understanding the special needs and experiences of each age group took on greater importance. As you will see in the following discussion of classic developmental theories, in the 1950s Erik Erikson first proposed that personality development is a lifelong process, and by the 1960s cognitive theorists began to argue that adult thinking also changes systematically over time.

Most classic developmental theories emerged during the early and middle decades of the 20th century. After you learn about some of the classic developmental theories, you will be introduced to contemporary theories. You will see that the newest theories integrate ideas from many classic theories, as well as from disciplines ranging from modern genetics, neuroscience, cognitive science, and psycholinguistics, to social and cultural psychology and anthropology. They acknowledge that human development is a complex synthesis of diverse processes at multiple levels of functioning. Because they embrace complexity, contemporary developmental theories can be especially useful to helping professionals. See the Timeline in Figure 1.1 for a graphic summary of some of the key theories and ideas in the history of developmental science.

1872 - Charles Darwin

Studied behavior of species using observational techniques; documented children's behavior in different cultures (e.g., Darwin, 1965/1872).

1904 - G. Stanley Hall

Studied children's 'normal development' using scientific methods and wrote an influential account of adolescent development (e.g., Hall, 1904).

1909 - Alfred Binet

Studied individual differences and developmental changes in children's intelligence (e.g., Binet & Simon, 1916).

1911 - John Dewey

Extended research on development to ecologically valid settings like schools; emphasized the social context of development (e.g., Dewey, 1911).

1920 - Sigmund Freud

Developed a theory of child development retrospectively from adult patients' psychoanalyses; emphasized unconscious processes and incorporated biological influences on development (e.g., Freud, 1920/1955).

1923 - Jean Piaget

Developed theory of children's cognition, morality and language development using observational and empirical research methods; popularized constructivist views of development.

1934 - Lev Vygotsky

Studied development of thought and language through the lens of culture; emphasized the social nature of learning; works originally in Russian became available in English in the 1970s and 1980s (e.g., Vygotsky, 1934).

1950 - Erik Erikson

Developed a theory of development that spanned the entire lifespan; emphasized social contexts (e.g., Erikson, 1950/1963).

1967-1969 - John Bowlby and Mary Ainsworth

Developed a theory of attachment that was fundamental to the understanding of social and emotional development across the lifespan and across cultures (e.g., Ainsworth, 1967; Bowlby, 1969/1982).

1979 - Urie Bronfenbrenner

Studied the contextual influences on development across cultures; popularized a multidimensional systems model of developmental processes (e.g., Bronfenbrenner, 1979).

1984 - Jerome Kagan

Studied temperamental contributions to child development; explored questions of nature and nurture (e.g., Kagan, 1984).

1984 - Dante Cicchetti

Developed a theory, called developmental psychopathology, that incorporated both normal and abnormal developmental trajectories; studies the effects of maltreatment on children's development (e.g., Cicchetti, 1984).

1999 - Michael Meaney and Moshe Szyf

Study the molecular processes involved in epigenetic transmission; study the effects of early experience on gene expression (e.g., Meaney, 2001; Ramchandani, Bhattacharya, Cervoni, & Szyf, 1999).

2002 - Michael Rutter

Studies the interaction of nature and nurture and its implication for public policy (e.g., Rutter, 2002).

Emphasizing Discontinuity: Classic Stage Theories

Some of the most influential early theories of development described human change as occurring in stages. Imagine a girl when she is 4 months old and then again when she is 4 years old. If your sense is that these two versions of the same child are fundamentally different in kind, with different intellectual capacities, different emotional structures, or different ways of perceiving others, you are thinking like a stage theorist. A stage is a period of time, perhaps several years, during which a person’s activities (at least in one broad domain) have certain characteristics in common. For example, we could say that in language development, the 4-month-old girl is in a preverbal stage: Among other things, her communications share in common the fact that they do not include talking. As a person moves to a different stage, the common characteristics of behavior change. In other words, a person’s activities have similar qualities within stages but different qualities across stages. Also, after long periods of stability, qualitative shifts in behavior seem to happen relatively quickly. For example, the change from not talking to talking seems abrupt or discontinuous. It tends to happen between 12 and 18 months of age, and once it starts, language use seems to advance very rapidly. A 4-year-old is someone who communicates primarily by talking; she is clearly in a verbal stage.

The preverbal to verbal example illustrates two features of stage theories. First, they describe development as qualitative or transformational change, like the emergence of a tree from a seed. At each new stage, new forms of behavioral organization are both different from and more complex than the ones at previous stages. Increasing complexity suggests that development has “directionality.” There is a kind of unfolding or emergence of behavioral organization.

Second, they imply periods of relative stability (within stages) and periods of rapid transition (between stages). Metaphorically, development is a staircase. Each new stage lifts a person to a new plateau for some period of time, and then there is another steep rise to another plateau. There seems to be discontinuity in these changes rather than change being a gradual, incremental process. One person might progress through a stage more quickly or slowly than another, but the sequence of stages is usually seen as the same across cultures and contexts, that is, universal. Also, despite the emphasis on qualitative discontinuities between stages, stage theorists argue for functional continuities across stages. That is, the same processes drive the shifts from stage to stage, such as brain maturation and social experience.

Sigmund Freud’s theory of personality development began to have an influence on developmental science in the early 1900s and was among the first to include a description of stages (e.g., Freud, 1905/1989, 1949/1969). Freud’s theory no longer takes center stage in the interpretations favored by most helpers or by developmental scientists. First, there is little evidence for some of the specific proposals in Freud’s theory (Loevinger, 1976). Second, his theory has been criticized for incorporating the gender biases of early 20th-century Austrian culture. Yet, some of Freud’s broad insights are routinely accepted and incorporated into other theories, such as his emphasis on the importance of early family relationships to infants’ emotional life, his notion that some behavior is unconsciously motivated, and his view that internal conflicts can play a primary role in social functioning. Several currently influential theories, like those of Erik Erikson and John Bowlby, incorporated some aspects of Freud’s theories or were developed to contrast with Freud’s ideas. For these reasons, it is important to understand Freud’s theory. Also, his ideas have permeated popular culture, and they influence many of our assumptions about the development of behavior. If we are to make our own implicit assumptions about development explicit, we must understand where they originated and how well the theories that spawned them stand up in the light of scientific investigation.

Freud’s Personality Theory

Sigmund Freud’s psychoanalytic theory both describes the complex functioning of the adult personality and offers an explanation of the processes and progress of its development throughout childhood. To understand any given stage it helps to understand Freud’s view of the fully developed adult.

Id, Ego, and Superego.

According to Freud, the adult personality functions as if there were actually three personalities, or aspects of personality, all potentially in conflict with one another. The first, the id, is the biological self, the source of all psychic energy. Babies are born with an id; the other two aspects of personality develop later. The id blindly pursues the fulfillment of physical needs or “instincts,” such as the hunger drive and the sex drive. It is irrational, driven by the pleasure principle, that is, by the pursuit of gratification. Its function is to keep the individual, and the species, alive, although Freud also proposed that there are inborn aggressive, destructive instincts served by the id.

The ego begins to develop as cognitive and physical skills emerge. In Freud’s view, some psychic energy is invested in these skills, and a rational, realistic self begins to take shape. The id still presses for fulfillment of bodily needs, but the rational ego seeks to meet these needs in sensible ways that take into account all aspects of a situation. For example, if you were hungry, and you saw a child with an ice cream cone, your id might press you to grab the cone away from the child – an instance of blind, immediate pleasure seeking. Of course, stealing ice cream from a child could have negative consequences if someone else saw you do it or if the child reported you to authorities. Unlike your id, your ego would operate on the reality principle, garnering your understanding of the world and of behavioral consequences to devise a more sensible and self-protective approach, such as waiting until you arrive at the ice cream store yourself and paying for an ice cream cone.

The superego is the last of the three aspects of personality to emerge. Psychic energy is invested in this “internalized parent” during the preschool period as children begin to feel guilty if they behave in ways that are inconsistent with parental restrictions. With the superego in place, the ego must now take account not only of instinctual pressures from the id, and of external realities, but also of the superego’s constraints. It must meet the needs of the id without upsetting the superego to avoid the unpleasant anxiety of guilt. In this view, when you choose against stealing a child’s ice cream cone to meet your immediate hunger, your ego is taking account not only of the realistic problems of getting caught but also of the unpleasant feelings that would be generated by the superego.

The Psychosexual Stages.

In Freud’s view, the complexities of the relationships and conflicts that arise among the id, the ego, and the superego are the result of the individual’s experiences during five developmental stages. Freud called these psychosexual stages because he believed that changes in the id and its energy levels initiated each new stage. The term sexual here applies to all biological instincts or drives and their satisfaction, and it can be broadly defined as “sensual.”

For each stage, Freud posited that a disproportionate amount of id energy is invested in drives satisfied through one part of the body. As a result, the pleasure experienced through that body part is especially great during that stage. Children’s experiences satisfying the especially strong needs that emerge at a given stage can influence the development of personality characteristics throughout life. Freud also thought that parents typically play a pivotal role in helping children achieve the satisfaction they need. For example, in the oral stage, corresponding to the first year of life, Freud argued that the mouth is the body part that provides babies with the most pleasure. Eating, drinking, and even nonnutritive sucking are presumably more satisfying than at other times of life. A baby’s experiences with feeding and other parenting behaviors are likely to affect her oral pleasure, and could influence how much energy she invests in seeking oral pleasure in the future. Suppose that a mother in the early 20th century believed the parenting advice of “experts” who claimed that nonnutritive sucking is bad for babies. To prevent her baby from sucking her thumb, the mother might tie the baby’s hands to the sides of the crib at night – a practice recommended by the same experts! Freudian theory would predict that such extreme denial of oral pleasure could cause an oral fixation: The girl might grow up to need oral pleasures more than most adults, perhaps leading to overeating, to being especially talkative, or to being a chain smoker. The grown woman might also exhibit this fixation in more subtle ways, maintaining behaviors or feelings in adulthood that are particularly characteristic of babies, such as crying easily or experiencing overwhelming feelings of helplessness. According to Freud, fixations at any stage could be the result of either denial of a child’s needs, as in this example, or overindulgence of those needs. Specific defense mechanisms, such as “reaction formation” or “repression,” can also be associated with the conflicts that arise at a particular stage.

In Table 1.1, you will find a summary of the basic characteristics of Freud’s five psychosexual stages. Some of these stages will be described in more detail in later chapters. Freud’s stages have many of the properties of critical (or sensitive) periods for personality development. That is, they are time frames during which certain developments must occur. Freud’s third stage, for example, provides an opportunity for sex typing and moral processes to emerge (see Table 1.1). Notice that Freud assumed that much of personality development occurs before age 5, during the first three stages. This is one of the many ideas from Freud’s theory that has made its way into popular culture, even though modern research clearly does not support this position.

By the mid-1900s, two other major stage theories began to significantly impact the progress of developmental science. The first, by Erik Erikson, was focused on personality development, reshaping some of Freud’s ideas. The second, by Jean Piaget, proposed that there are stagelike changes in cognitive processes during childhood and adolescence, especially in rational thinking and problem solving.

TABLE 1.1 Freud's Psychosexual Stages of Development

| Stage | Approximate age | Description |
|---|---|---|
| Oral | Birth to 1 year | Infants develop special relationships with caregivers. Mouth is the source of greatest pleasure. Too much or too little oral satisfaction can cause an "oral fixation," leading to traits that actively (smoking) or symbolically (overdependency) are oral or infantile. |
| Anal | 1 to 3 years | Anal area is the source of greatest pleasure. Harsh or overly indulgent toilet training can cause an "anal fixation," leading to later adult traits that recall this stage, such as being greedy or messy. |
| Phallic | 3 to 5 or 6 years | Genitalia are the source of greatest pleasure. Sexual desire directed toward the opposite-sex parent makes the same-sex parent a rival. Fear of angering the same-sex parent is resolved by identifying with that parent, which explains how children acquire both sex-typed behaviors and moral values. If a child has trouble resolving the emotional upheaval of this stage through identification, sex role development may be deviant or moral character may be weak. |
| Latency | 6 years to puberty | Relatively quiescent period of personality development. Sexual desires are repressed after the turmoil of the last stage. Energy is directed into work and play. There is continued consolidation of the traits laid down in the first three stages. |
| Genital | Puberty through adulthood | At puberty, adult sexual needs become the most important motivators of behavior. The individual seeks to fulfill needs and expend energy in socially acceptable activities, such as work, and through marriage with a partner who will substitute for the early object of desire, the opposite-sex parent. |

Erikson’s Personality Theory

Erik Erikson studied psychoanalytic theory with Anna Freud, Sigmund’s daughter, and later proposed his own theory of personality development (e.g., Erikson, 1950/1963). Like many “neo-Freudians,” Erikson deemphasized the id as the driving force behind all behavior, and he emphasized the more rational processes of the ego. His theory is focused on explaining the psychosocial aspects of behavior: attitudes and feelings toward the self and toward others. Erikson described eight psychosocial stages. The first five correspond to the age periods laid out in Freud’s psychosexual stages, but the last three are adult life stages, reflecting Erikson’s view that personal identity and interpersonal attitudes are continually evolving from birth to death.

The “Eight Stages of Man.”

In each stage, the individual faces a different “crisis” or developmental task (see Chapter 9 for a detailed discussion of Erikson’s concept of crisis). The crisis is initiated, on one hand, by changing characteristics of the person (biological maturation or decline, cognitive changes, advancing or deteriorating motor skills) and, on the other hand, by corresponding changes in others’ attitudes, behaviors, and expectations. As in all stage theories, people qualitatively change from stage to stage, and so do the crises or tasks that they confront. In the first stage, infants must resolve the crisis of trust versus mistrust (see Chapter 4). Infants, in their relative helplessness, are “incorporative.” They “take in” what is offered, including not only nourishment but also stimulation, information, affection, and attention. If infants’ needs for such input are met by responsive caregivers, babies begin to trust others, to feel valued and valuable, and to view the world as a safe place. If caregivers are not consistently responsive, infants will fail to establish basic trust or to feel valuable, carrying mistrust with them into the next stage of development, when the 1- to 3-year-old toddler faces the crisis of autonomy versus shame and doubt. Mistrust in others and self will make it more difficult to successfully achieve a sense of autonomy.

The new stage is initiated by the child’s maturing muscular control and emerging cognitive and language skills. Unlike helpless infants, toddlers can learn not only to control their elimination but also to feed and dress themselves, to express their desires with some precision, and to move around the environment without help. The new capacities bring a strong need to practice and perfect the skills that make children feel in control of their own destinies. Caregivers must be sensitive to the child’s need for independence and yet must exercise enough control to keep the child safe and to help the child learn self-control. Failure to strike the right balance may rob children of feelings of autonomy (a sense that “I can do it myself”) and can instead promote either shame or self-doubt.

These first two stages illustrate features of all of Erikson’s stages (see Table 1.2 for a description of all eight stages). First, others’ sensitivity and responsiveness to the individual’s needs create a context for positive psychosocial development. Second, attitudes toward self and toward others emerge together. For example, developing trust in others also means valuing (or trusting) the self. Third, every psychosocial crisis or task involves finding the right balance between positive and negative feelings, with the positive outweighing the negative. Finally, the successful resolution of a crisis at one stage helps smooth the way for successful resolutions of future crises. Unsuccessful resolution at an earlier stage may stall progress and make maladaptive behavior more likely.

TABLE 1.2 Erikson's Psychosocial Stages of Development

| Stage or psychosocial "crisis" | Approximate age | Significant events | Positive outcome or virtue developed | Negative outcome |
|---|---|---|---|---|
| Trust vs. Mistrust | Birth to 1 year | Child develops a sense that the world is a safe and reliable place because of sensitive caregiving | Hope | Fear and mistrust of others |
| Autonomy vs. Shame & Doubt | 1 to 3 years | Child develops a sense of independence tied to use of new mental and motor skills | Willpower | Self-doubt |
| Initiative vs. Guilt | 3 to 5 or 6 years | Child tries to behave in ways that involve more "grown-up" responsibility and experiments with grown-up roles | Purpose | Guilt over thought and action |
| Industry vs. Inferiority | 6 to 12 years | Child needs to learn important academic skills and compare favorably with peers in school | Competence | Lack of competence |
| Identity vs. Role Confusion | 12 to 20 years | Adolescent must move toward adulthood by making choices about values, vocational goals, etc. | Fidelity | Inability to establish sense of self |
| Intimacy vs. Isolation | Young adulthood | Adult becomes willing to share identity with others and to commit to affiliations and partnerships | Love | Fear of intimacy, distancing |
| Generativity vs. Stagnation | Middle adulthood | Adult wishes to make a contribution to the next generation, to produce, mentor, or create something of lasting value, as in the rearing of children, community service, or expert work | Care | Self-absorption |
| Ego Integrity vs. Despair | Late adulthood | Adult comes to terms with life's successes, failures, and missed opportunities and realizes the dignity of own life | Wisdom | Regret |

Erikson’s personality theory is often more appealing to helping professionals than Freud’s theory. Erikson’s emphasis on the psychosocial aspects of personality focuses attention on precisely the issues that helpers feel they are most often called on to address: feelings and attitudes about self and about others. Also, Erikson assumed that the child or adult is an active, self-organizing individual who needs only the right social context to move in a positive direction. Further, Erikson was himself an optimistic therapist who believed that poorly resolved crises could be resolved more adequately in later stages if the right conditions prevailed. Erikson was also sensitive to cultural differences in behavioral development. Finally, developmental researchers frequently find Eriksonian interpretations of behavior useful. Studies of attachment, self-concept, self-esteem, and adolescent identity, among other topics addressed in subsequent chapters, have produced results compatible with some of Erikson’s ideas. (See Chapter 4, Box 4.2 for a biographical sketch of Erikson.)

Piaget’s Cognitive Development Theory

In Jean Piaget’s cognitive development theory, we see the influence of 18th-century philosopher Jean-Jacques Rousseau (e.g., 1762/1948), who argued that children’s reasoning and understanding emerge naturally in stages and that parents and educators can help most by allowing children freedom to explore their environments and by giving them learning experiences that are consistent with their level of ability. Similarly, Piaget outlined stages in the development of cognition, especially logical thinking, which he referred to as operational thought (e.g., Inhelder & Piaget, 1955/1958, 1964; Piaget, 1952, 1954). He assumed that normal adults are capable of thinking logically about both concrete and abstract contents but that this capacity evolves in four stages through childhood. Briefly, the first stage, the sensorimotor stage, lasts for about 2 years and is characterized by an absence of representational thought (see Chapter 3). Although babies are busy taking in the sensory world, organizing it on the basis of inborn reflexes or patterns, and then responding to their sensations, Piaget believed that they cannot yet symbolically represent their experiences, and so they cannot really reflect on them. This means that young infants do not form mental images or store memories symbolically, and they do not plan their behavior or act intentionally. These capacities emerge between 18 and 24 months, launching the next stage.

Piaget’s second, third, and fourth stages roughly correspond to the preschool, elementary school, and adolescent-adult years. These stages are named for the kinds of thinking that Piaget believed possible for these age groups. Table 1.3 summarizes each stage briefly, and we will describe the stages more fully in subsequent chapters.

Piaget’s theory is another classic stage model. First, cognitive abilities are qualitatively similar within stages. If we know how a child approaches one kind of task, we should be able to predict her approaches to other kinds of tasks as well. Piaget acknowledged that children might be advanced in one cognitive domain or lag behind in another. For example, an adolescent might show more abstract reasoning about math than about interpersonal matters. These within-stage variations he called décalages. But generally, Piaget expected that a child’s thinking would be organized in similar ways across most domains. Second, even though progress through the stages could move more or less quickly depending on many individual and contextual factors, the stages unfold in an invariant sequence, regardless of context or culture. The simpler patterns of physical or mental activity at one stage become integrated into more complex organizational systems at the next stage (hierarchical integration). Finally, despite the qualitative differences across stages, there are functional similarities or continuities from stage to stage in the ways in which children’s cognitive development proceeds. According to Piaget, developmental progress depends on children’s active engagement with the environment. This active process, which will be described in more detail in Chapter 3, suggests that children (and adults) build knowledge and understanding in a self-organizing way. They interpret new experiences and information in ways that fit their current ways of understanding even as they make some adjustments to their ways of understanding in the process. Children do not just passively receive information from without and store it “as is.” And knowledge does not just emerge from within as though preformed. Instead, children actively build their knowledge, using both existing knowledge and new information. This is a constructivist view of development.

Piaget’s ideas about cognitive development were first translated into English in the 1960s, and they swept American developmental researchers off their feet. His theory filled the need for an explanation that acknowledged complex qualitative changes in children’s abilities over time, and it launched an era of unprecedented research on all aspects of children’s intellectual functioning that continues today. Although some of the specifics of Piaget’s theory have been challenged by research findings, many researchers, educators, and other helping professionals still find the broad outlines of this theory very useful for organizing their thinking about the kinds of understandings that children of different ages can bring to a problem or social situation. Piaget’s theory also inspired some modern views of cognitive change in adulthood. As you will see in Chapter 11, post-Piagetians have proposed additional stages in the development of logical thinking, hypothesizing that the abstract thinking of the adolescent is transformed during adulthood into a more relativistic kind of logical thinking, partly as a function of adults’ practical experience with the complexity of real-world problems.

TABLE 1.3 Piaget's Cognitive Stages of Development

| Stage | Approximate age | Description |
|---|---|---|
| Sensorimotor | Birth to 2 years | Through six substages, the source of infants' organized actions gradually shifts. At first, all organized behavior is reflexive, automatically triggered by particular stimuli. By the end of this stage, behavior is guided more by representational thought. |
| Preoperational | 2 to 6 or 7 years | Early representational thought tends to be slow. Thought is "centered", usually focused on one salient piece of information, or aspect of an event, at a time. As a result, thinking is usually not yet logical. |
| Concrete operational | 7 to 11 or 12 years | Thinking has gradually become more rapid and efficient, allowing children to now "decenter", or think about more than one thing at a time. This also allows them to discover logical relationships between or among pieces of information. Their logical thinking is best about information that can be demonstrated in the concrete world. |
| Formal operational | 12 years through adulthood | Logical thinking extends to "formal" or abstract material. Young adolescents can think logically about hypothetical situations, for example. |

Classic Theories and the Major Issues They Raise

Classic theories of development have typically addressed a set of core issues. In our brief review you have been introduced to just a few of these. Is developmental change qualitative (e.g., stagelike) or quantitative (e.g., incremental)? Are some developments restricted to certain critical periods in the life cycle, or are changes in brain and behavior possible at any time given the appropriate opportunities? Are there important continuities across the life span (in characteristics or change processes), or is everything in flux? Are people actively influencing the course and nature of their own development (self-organizing), or are they passive products of other forces? Which is more important in causing developmental change, nature (heredity) or nurture (environment)? Are there universal developmental trajectories, processes, and changes that are the same in all cultures and historical periods, or is development more specific to place and time?

Classic theorists usually took a stand on one side or the other of these issues, framing them as “either-or” possibilities. However, taking an extreme position does not fit the data we now have available. Contemporary theorists propose that human development is best described by a synthesis of the extremes. The best answer to all of the questions just posed appears to be “Both.”

Developmental researchers acknowledge that both nature and nurture influence most behavioral outcomes, but in the past they have often focused primarily on one or the other, partly because a research enterprise that examines multiple causes at the same time tends to be a massive undertaking. So, based on personal interest, theoretical bias, and practical limitations, developmental researchers have often systematically investigated one kind of cause, setting aside examination of other causes of behavior. Interestingly, what these limited research approaches have accomplished is to establish impressive bodies of evidence, both for the importance of genes and for the importance of the environment!

What theorists and researchers face now is the difficult task of specifying how the two sets of causes work together: Do they have separate effects that “add up,” for example, or do they qualitatively modify each other, creating together, in unique combinations, unique outcomes? Modern multidimensional theories make the latter assumption, and evidence is quickly accumulating to support this view. Heredity and environment are interdependent: The same genes operate differently in different environments, and the same environments are experienced differently by individuals with different genetic characteristics. Developmental outcomes are always a function of interplay between genes and environment, and the operation of one cannot even be described adequately without reference to the other. The study of epigenetics, the alteration of gene expression by the environment, has led to a radically new understanding of some mechanisms of gene-environment interaction. Epigenetic changes have long-term, important effects on development, and some epigenetic changes can even be transmitted transgenerationally (Skinner, 2011). In Chapter 2 you will find many examples of this complex interdependence.

"It is astonishing how elements that seem insoluble become soluble when someone listens, how confusions that seem irremediable turn into relatively clear flowing streams when one is heard. I have deeply appreciated the times that I have experienced this sensitive, empathic, concentrated listening." (Carl R. Rogers)